Background:

Say you have this C program, which fakes a sine waveform output by calculating a floating-point number and then converting it to an integer:

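#include <math.h>

int main(void) {

unsigned int value;

float signal;

int count = 0; /* sample index; not declared in the original snippet, assumed here */

signal = 2047.0 * (1 + sin(2 * 3.142 * count / 50.0));

value = signal; /* implicit float-to-integer conversion */

return 0;

}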

We first calculate “signal”, which is a floating-point number. Then, we assign “signal” to “value”! Note that “value” is an integer, not a floating-point number. Think as if you were an embedded processor: how can you convert a floating-point number to an integer? How are floating-point numbers represented in memory in the first place?

From a human point of view, it is an easy thing to do: just take the actual number and throw everything after the decimal point away. A processor, however, only sees the bit pattern stored in memory.

Outline:

- Floating-point numbers.

- IEEE 754 Standard.

- Range of floating-point numbers.

- Precision.

- Other precision formats.

- Conclusion.

Floating-point numbers:

Floating-point numbers are stored in a format which does not map well onto the integer registers. Without dedicated hardware, a calculation involving this type of number requires a lengthy sequence of individual microprocessor operations and is time-consuming. The alternative approach is to incorporate a floating-point unit within or in parallel with the ordinary processor. This was once confined to advanced microprocessors such as those found in high-performance workstations. Nowadays, however, you can get floating-point units in microcontrollers such as the ARM Cortex-M4.

The format I will describe for storing floating-point numbers was first standardised in around 1985. It was the outcome of many years of careful work to ensure that numerical calculations behaved in:

- A stable way even at the extremes of the range of numbers which could be represented (both very big and very small); and

- A reproducible way on different computers.

Aside fact – The 80x87 Floating-Point Coprocessors:

Because of the limitations in the technology of silicon fabrication in the late 1970s, floating-point capability could not be integrated onto the 8086 chip. The 8087 was a separate chip, a coprocessor, which operated in parallel with the 8086.

IEEE 754 Standard for floating-point numbers:

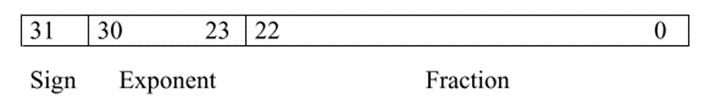

$\text{Number} = (-1)^{\text{Sign}} \times (1 + \text{Fraction}) \times 2^{\text{Power}}$

Let’s discuss this formula:

- It is similar to standard scientific notation in base 10, e.g. $1.60 \times 10^{-19}$, where 1.60 is called the Mantissa and -19 is called the Exponent.

- Sign determines whether the number is positive or negative: Sign = 0 means positive, Sign = 1 means negative.

- $\text{Fraction} = (\text{bit 22}) \times 2^{-1} + (\text{bit 21}) \times 2^{-2} + (\text{bit 20}) \times 2^{-3} + \dots + (\text{bit 0}) \times 2^{-23}$, i.e. the MSB is worth 1/2, the next bit 1/4, the next bit 1/8, etc.

- For ordinary numbers, a 1 is always added to the Fraction, so the mantissa starts at 1, not at 0. This leading 1 is implied and is not stored in memory.

- Power = Exponent – 127, where Exponent is an unsigned binary integer which, for ordinary numbers, takes values in the range 1 to 254 (0 and 255 are reserved for special cases, explained further down).

If this is confusing, that is alright. See this example to better understand where these numbers come from:

Say we have these four bytes stored in memory, lowest address first: 00 00 A0 40

Remember, a little-endian machine stores the least significant byte first, so the value appears flipped in memory. Flip it back and we get: 40 A0 00 00

Convert 0x40A00000 to binary (a byte at a time):

| 40 | A0 | 00 | 00 |
|---|---|---|---|
| 0100 0000 | 1010 0000 | 0000 0000 | 0000 0000 |

Take 1 bit for the Sign, 8 bits for the Exponent and the remaining 23 bits for the Fraction (from the formula):

| Sign | Exponent | Fraction |
|---|---|---|
| 0 | 100 0000 1 | 010 0000 0000 0000 0000 0000 |

Sign = 0: number is positive

Exponent = $1000\,0001_2 = 129_{10}$

Power = Exponent – 127 = 129 – 127 = $2_{10}$

Fraction = $010\,0000\,0000\,0000\,0000\,0000_2$ = 1/4 (MSB is 1/2, second bit 1/4, etc.)

∴ $\text{Number} = (-1)^{\text{Sign}} \times (1 + \text{Fraction}) \times 2^{\text{Power}}$

$= (-1)^{0} \times (1 + 1/4) \times 2^{2}$

$= 5.0$

To go the other way round:

Say we want to store 5.0 in memory:

First, what is the biggest power of 2 that fits in this number? It is an easy one: take Power = 2, because $2^{2} = 4$ but $2^{3} = 8$ (bigger than 5).

And Power = Exponent – 127. So, Exponent = 129.

Sign = 0 (because 5.0 is positive)

$\text{Number} = (-1)^{\text{Sign}} \times (1 + \text{Fraction}) \times 2^{\text{Power}}$

So, $5.0 = (-1)^{0} \times (1 + \text{Fraction}) \times 2^{2}$

By rearranging the equation, we get: $(1 + \text{Fraction}) = 5.0 / 2^{2} = 5/4 = 1.25$. So, Fraction = 0.25 = 1/4.

In binary:

| Sign | Exponent | Fraction |
|---|---|---|
| positive | $129_{10}$ | $0.25_{10}$ |
| 0 | 1000 0001 | 010 0000 0000 0000 0000 0000 |

Note: Fraction = 010 0000 0000 0000 0000 0000 = 0*(1/2) + 1*(1/4) + 0*(1/8) + ….

At the end, the number to be stored is:

$0100\,0000\,1010\,0000\,0000\,0000\,0000\,0000_2$ = 0x40 A0 00 00

Remember, most machines are little-endian nowadays, so the least significant byte is stored first and the data appears flipped in memory.

Therefore, the bytes in memory, lowest address first, are: 00 00 A0 40

Why power = Exponent – 127?

From the 8-bit exponent, we get 256 distinct values ($2^{8} = 256$), from 0 to 255. We keep 0 and 255 for special use, discussed in the next section. We are left with the range 1 → 254. We want both positive and negative powers, because we want to be able to use both very big and very small numbers in our calculations, of course. Therefore, we split this range in two: 127 = 254/2. So, the smallest power is 1 – 127 = -126 and the largest power is 254 – 127 = +127.

∴ the range of Power is -126 to +127

When do we use Exponent = 0 or 255?

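- If Exponent = $1111\,1111_2$ = 255, Sign = 0 and every bit in the Fraction is 0, Number = inf = +∞

- If Exponent = 255, Sign = 1 and every bit in the Fraction is 0, Number = -∞

- If Exponent = 255 and any bit in the Fraction is 1, Number = NaN (“Not a Number”, the result of an invalid operation such as 0/0)

- If Exponent = 0 and every bit in the Fraction is 0, Number = 0. If Exponent = 0 and any bit in the Fraction is 1, the number is denormalized (see the next section)

As you see, these are incredibly useful numbers to have, especially in programming languages where you want a value bigger than any other number (+∞/-∞) or a marker for an invalid or missing result (NaN).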

What does denormalized mean?

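If Exponent = $0000\,0000_2$ = 0 (all bits are 0s) and Fraction = 111 1111 1111 1111 1111 1111, Mantissa = 0.9999…

If Exponent is anywhere from 1 to 254 and Fraction = 111 1111 1111 1111 1111 1111, Mantissa = 1.9999…

This means that with Exponent = 0 the format throws away the 1 in 1.9999… and keeps the 0.9999…, while Power stays fixed at -126.

This is called denormalized. Denormalized numbers are:

- Irregular: they do not follow the 1 + Fraction rule used by ordinary numbers

- Risky to rely on: results that fall into this range can make a calculation unstable

- Less precise: the leading bits of the Fraction are 0, so fewer significant bits remain

- Coarse: the step between neighbouring values is very big compared with the values themselves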

Aside fact:

The number $78.87_{10}$ is not a number that can be precisely represented in binary.

“What decimal representation is good at, binary is not; And what binary is good at, decimal is not.”

Range of floating-point numbers:

These figures are for standard single-precision floating-point numbers. Other formats are available; see Other precision formats below.

- Largest:

When Exponent = 254, we have the largest exponent allowed for normal numbers. This means Power = 254 – 127 = 127.

$\text{Fraction} = 2^{-1} + 2^{-2} + 2^{-3} + 2^{-4} + \dots + 2^{-23} \approx 1$ is the largest fraction.

Then, $\text{Number} = (1 + 1) \times 2^{127} \approx 3.4 \times 10^{38}$ is the largest number.

- Smallest:

When Exponent = 1, we have the smallest exponent allowed for normal numbers. This means Power = 1 – 127 = -126.

Fraction = 0 is the smallest fraction.

Then, $\text{Number} = (1 + 0) \times 2^{-126} \approx 1.18 \times 10^{-38}$ is the smallest positive normal number.

Precision of floating-point numbers:

In single precision:

Sign = 1 bit

Exponent = 8 bits

Fraction = 23 bits

Total length = 32 bits = 4 bytes

Why did we choose this number of bits?

The choices of number of bits to represent the exponent and fraction and the protocols for dealing with very small and very large numbers are not arbitrary. They are the result of careful numerical analysis, which has stood the test of time.

In other words, we settled on this set of bits because of what is needed in practice. For instance, with an 8-bit exponent the power could in principle reach $2^{255} \approx 6 \times 10^{76}$. By giving half of the exponent range to negative powers, we are left with about $10^{38}$, which is still a big number. Do we actually need a bigger range than this for our daily calculations? Remember, higher precision will consume more power from the CPU and will need more time to finish, so we probably do not need a much bigger range. That said, our calculators nowadays can do better than this range (≈ $10^{99}$).

“This was a design choice. We chose to have single precision with 8 bits exponent and 23 bits fraction.”

Other precision formats:

| Precision | Single | Double | Extended |
|---|---|---|---|
| Storage (bytes) | 4 | 8 | 10 |
| Exponent (bits) | 8 | 11 | 15 |
| Exponent (bias) | 127 | 1023 | 16383 |
| Fraction (bits) | 23 | 52 | 64 |
| Fraction (1+ …) | Implicit | Implicit | Explicit |
| Largest | $3.4 \times 10^{38}$ | $1.8 \times 10^{308}$ | $1.2 \times 10^{4932}$ |
| Smallest | $1.18 \times 10^{-38}$ | $2.23 \times 10^{-308}$ | |

The main reason for using double precision (8 bytes) numbers is not to increase range, but to improve precision. In single precision, the smallest difference between successive floating-point numbers is given by the “worst case”:

Fractional part originally: 1 + 0. Next number: $1 + 2^{-23}$.

So, precision is $2^{-23}$ in 1, i.e. 1 part in $2^{23}$. Note that $2^{23} \approx 10^{0.3 \times 23} \approx 10^{7}$ (because $2 = 10^{\log_{10} 2} \approx 10^{0.3}$).

∴ Single precision is 1 part in $10^{7}$.

In double precision: precision is 1 part in $2^{52} \approx 10^{0.3 \times 52} \approx 10^{15}$.

∴ Double precision is 1 part in $10^{15}$.

“Double precision is far more precise than single, but do you need it?”

Conclusion:

- Without a floating-point unit, floating-point calculations need a lengthy sequence of individual microprocessor operations and are time-consuming to handle. They are unpleasant.

- Some time ago, it was unusual to get a processor that could handle floating-point numbers in hardware.

- The IEEE 754 Standard: $\text{Number} = (-1)^{\text{Sign}} \times (1 + \text{Fraction}) \times 2^{\text{Power}}$

- Exponent = 255 with every Fraction bit 0, Number = ∞; if Sign = 1 as well, Number = -∞

- Exponent = 255 with any Fraction bit set, Number = NaN (Not a Number).

- Largest number from single precision ≈ $3.4 \times 10^{38}$.

- Smallest normal number from single precision ≈ $1.18 \times 10^{-38}$.

- Other precision formats available: Double and Extended

Practice the floating-point representations and conversions on this website. It is a good website to visualize what is going on when turning on/off every bit individually in the Exponent and Mantissa parts.
